Bait taken. I was soon in Max, testing out live.step, and it was true - you couldn't use Apple/Ctrl-M to map a MIDI controller to the sliders that control the pitch, velocity, etc. in live.step. Note that the intended usage for live.step is that you use the mouse to set the sliders, and then map the output to a parameter in another device. This was different - controlling the sliders themselves in live.step with a MIDI Controller. Challenge accepted.

Not using live.step was going to be interesting. Back to first principles...

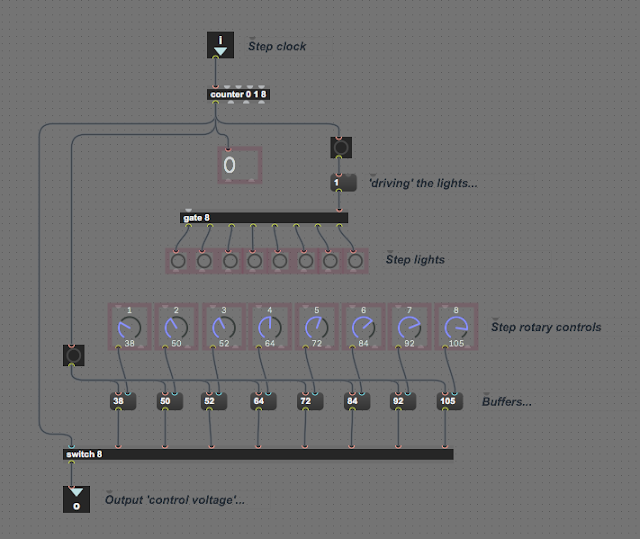

The result is MIDIdifferentTWO, which is, of course, different to MIDIdifferentONE! TWO is deliberately 'old-school' in design, with rotary controls and big lights for each step, and is built without using live.step. So there's a classic 'counter' object to generate the steps, the rotary controls are scanned using an 8-way 'gate' object, and the lights are the standard 'blink' / 'bang' buttons, driven by a 'switch 8' object. To complicate matters, the 'gate' object lets values pass through it, but something has to send the value, and a rotary control only outputs a value when you change it (or bang it). My solution was to use a 'message' object as a buffer between each rotary control and the 'gate' object, and this seems to work quite well, at the cost of quite a bit of wiring up of bangs...
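For anyone who doesn't use Max, here is a minimal Python sketch of one plausible reading of that arrangement (an assumption, not the actual patch): the counter's step number goes to the left inlet of 'gate 8' to choose which outlet is open, a clock bang goes through that outlet, and it bangs a 'message' box that remembers the last value its rotary control sent. The class names `MessageBuffer` and `Gate` are hypothetical stand-ins for the Max objects, not a Max API.

```python
class MessageBuffer:
    """Stands in for a Max 'message' box: stores a value, outputs it on bang."""

    def __init__(self, value=0):
        self.value = value

    def set(self, value):
        # A rotary control only sends when it is moved; the buffer remembers it.
        self.value = value

    def bang(self):
        return self.value


class Gate:
    """Stands in for 'gate 8': the left inlet picks an outlet (1-8, 0 = closed)."""

    def __init__(self, outlets):
        self.outlets = outlets  # one MessageBuffer per outlet
        self.open = 0

    def select(self, outlet):
        self.open = outlet

    def bang(self):
        # Route the bang to the open outlet; nothing passes when closed.
        if 1 <= self.open <= len(self.outlets):
            return self.outlets[self.open - 1].bang()
        return None


buffers = [MessageBuffer() for _ in range(8)]
gate = Gate(buffers)

# The rotary controls were moved once, some time before the sequence started.
for i, v in enumerate([10, 20, 30, 40, 50, 60, 70, 80]):
    buffers[i].set(v)

outputs = []
for step in [1, 2, 3, 4, 5, 6, 7, 8, 1]:  # counter output
    gate.select(step)  # step number to the gate's left inlet
    outputs.append(gate.bang())  # clock bang to the right inlet
print(outputs)  # [10, 20, 30, 40, 50, 60, 70, 80, 10]
```

The point of the buffer shows up in the loop: the rotary controls only 'moved' once, but each buffer can still supply its value every time its step comes round.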

I wondered whether I should use the buffer contents to replace the parameter value of the rotary controls, but decided in the end that this wasn't required. I also rejected the idea of making the buffer the map target for Ableton's 'remote control' ('control voltage') system, which would have let me set the rotary controls as 'hidden', but I'm leaving this as an option depending on feedback. The Ableton M4L Guidelines on hiding objects so that they don't overwhelm the Undo history seem to be incompatible with making controls map targets, so this design has some flexibility in terms of possible mitigations.

The Max For Live code above is simplified, and there's a missing connection! The step clock output from the counter should be connected to the left-hand input of the 'gate 8' object - but you can always look at the real code if you download the amxd from MaxForLive.com.

The initial design was just a rapid response to a query in the Facebook 'Max For Live Users' group, so it was me trying to see if I could make do without live.step. The result then went through several drafts to the latest release (so far), 0.06, which adds direction to the steps (the counter object makes this easy), shuffling of the order of the steps (via a look-up table), skipping of steps (using the pack object to remove/restore numbers in the look-up table), and a second channel synchronised to Live's transport. Having two channels, where one can be free-running (or driven by MIDI events in a clip, which probably counts as user-controlled sync!) and the other is locked to the DAW transport, gives two very different, contrasting sources of 'control voltages', plus it also looks good. For a single-channel, 8-step, unsynced step sequencer, I might have been tempted to put the rotary controls in two rows to reduce the width of the device (M4L Guidelines again!), and I might even have thrown away the 'old-school' look and used sliders, but two channels means that the device is going to be wide anyway, and stacking two sliders vertically doesn't work for me.
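Shuffling via a look-up table can be sketched in a few lines of Python. This assumes the table simply lists step numbers in playing order and the counter indexes into it; `random.shuffle` is my stand-in for however the device actually reorders the table, and the helper names are hypothetical.

```python
import random

STEPS = [1, 2, 3, 4, 5, 6, 7, 8]  # look-up table: the playing order of the steps


def shuffle_table(table, rng):
    """Return a new playing order: the same steps, just rearranged."""
    new_table = list(table)
    rng.shuffle(new_table)
    return new_table


def step_for_count(table, count):
    """The counter produces 0, 1, 2...; the table turns that into a step number."""
    return table[count % len(table)]


rng = random.Random(6160)  # seeded only so that the example is repeatable
order = shuffle_table(STEPS, rng)
played = [step_for_count(order, count) for count in range(len(order) * 2)]
print("order:", order)
print("played:", played)
```

Because only the table changes, the counter and everything downstream of it stay exactly the same, which is what makes a look-up table a tidy way to do this.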

Implementing the 'Skipping' of steps required additional map targets for each step. I was thinking of a Novation Launch Control (or XL) when I was doing this, but there are lots of other MIDI Controllers available. The solution was just to use a toggle text object as the map target... One complication in the Max For Live coding was how to deal with the number of steps when you could skip or un-skip steps, but once again, the 'counter' object makes changing the count maximum easy, so I just used the look-up table length as the counter maximum, and it was sorted! I'm always intrigued by how some problems loom ahead as major, maybe insurmountable, challenges, but when you actually start to code them, they kind of shrink and aren't as impossible as you anticipated. Then again, sometimes apparently simple things can take ages to figure out, so there are no certainties!
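Here's a minimal Python sketch of the skip idea, assuming (as described above) that skipping removes a step number from the look-up table and the counter maximum simply follows the table length. The helper names are hypothetical, restoring a step in 1-8 order is my assumption (the device might put it back into a shuffled position instead), and refusing to skip the last remaining step is an extra guard I've added so that the counter maximum never reaches zero.

```python
def skip(table, step):
    """Remove a step from the look-up table (it shows as an 'X')."""
    if len(table) <= 1:
        return list(table)  # guard (assumption): always keep at least one step
    return [s for s in table if s != step]


def unskip(table, step, full=range(1, 9)):
    """Restore a step, rebuilding the table in 1-8 order (an assumption)."""
    wanted = set(table) | {step}
    return [s for s in full if s in wanted]


class Counter:
    """A simple up-counter that wraps from maximum - 1 back to 0."""

    def __init__(self, maximum):
        self.maximum = maximum
        self.count = -1

    def tick(self):
        self.count = (self.count + 1) % self.maximum
        return self.count


table = list(range(1, 9))
for s in (5, 6, 7, 8):  # e.g. skip steps 5 to 8
    table = skip(table, s)

counter = Counter(len(table))  # counter maximum = look-up table length
played = [table[counter.tick()] for _ in range(6)]
print(len(table), played)  # 4 [1, 2, 3, 4, 1, 2]
```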

The blinking lights proved to be one of the most challenging things to get right... The 'button' object has a parameter called 'blinktime', which controls how long the light stays on after it gets a 'bang'. Unfortunately, when the rate at which you scan across the steps changes, blinktime needs to change as well: ideally it should match the step length exactly, so that there is no overlap (two lights lit at once) and no gap (no light lit at all). This turns out to be difficult! Now if the 'button' object would just stay lit for the whole of the time that a step was active, like an LED or even a bulb, then it would be easy. Initially I didn't want to mess about making a custom graphic object like that, but eventually I decided that I had to, and here's what I did...
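A bit of arithmetic shows why a fixed blinktime can't work. In this Python sketch (assuming blinktime is in milliseconds, and using hypothetical function names), the step length depends on the tempo and the rate, so any one blinktime is too long at fast rates (overlap) and too short at slow ones (gap).

```python
def step_period_ms(bpm, beats_per_step):
    """Length of one step in milliseconds at a given tempo."""
    return 60000.0 / bpm * beats_per_step


def blink_problem(blinktime_ms, period_ms):
    """Compare a fixed blinktime with the current step length."""
    if blinktime_ms > period_ms:
        return "overlap"  # the previous light is still on when the next one lights
    if blinktime_ms < period_ms:
        return "gap"  # no light is lit for the end of each step
    return "exact"


BLINKTIME_MS = 250  # one fixed setting, chosen for illustration
for beats in (0.25, 0.5, 1.0):
    period = step_period_ms(120, beats)
    print(beats, period, blink_problem(BLINKTIME_MS, period))
```

At 120 BPM only the half-beat rate comes out 'exact'; the quarter-beat rate overlaps and the one-beat rate leaves gaps, which is why a light that follows the step itself is the better answer.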

I wrote a special object just to decode the step number to drive scrolling lights! As often happens with these things, (and as noted above!) when you sit down to do it, then it isn't as difficult as you expected. It turned out to be nothing more than a set of compares, but instead of using the 'button' object as the indicator, I used the live.toggle object, which shows one colour when you send it a one, and another colour when you send it a zero. So now the steps are shown by an indicator that lights up for the whole of that step, and is off the rest of the time. Neat and much better than my previous default indicator: the 'button' object. Sometimes being forced into a change is good for you...
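The 'set of compares' can be sketched in Python like this (a hypothetical function, not the contents of scroll_mr): each indicator compares the current step number with its own position, so exactly one live.toggle gets a 1 and the rest get a 0, and the light stays on for the whole step, whatever the rate.

```python
def decode_step(step, num_steps=8):
    """Turn a step number (1-8) into one on/off value per indicator."""
    # One comparison per indicator: 1 if this is the current step, else 0.
    return [1 if step == position else 0 for position in range(1, num_steps + 1)]


for step in (1, 4, 8):
    print(step, decode_step(step))
# 1 [1, 0, 0, 0, 0, 0, 0, 0]
# 4 [0, 0, 0, 1, 0, 0, 0, 0]
# 8 [0, 0, 0, 0, 0, 0, 0, 1]
```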

Notice that this time the missing connection is missing no longer! So what do the comparisons look like inside the scroll_mr package?

As I said, all a bit obvious really. But it works very nicely!

Using MIDIdifferentTWO

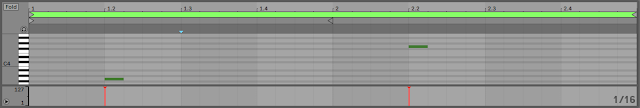

For something that started out as about half an hour's coding in response to a Facebook query, the final result (so far) has quite a lot going on! So, as usual, here's a side-to-side detailed description of all of the controls and what they do.

First, notice that there are two separate step sequencers. The top one can either be free-running, with its own clock running at the 'Rate' speed when the 'Mode' selector is set to '=Not Synced=' (I'm never sure if there should be an 'h' in synced/synched...), or else be triggered by one of five different MIDI Events from the clip in Ableton Live: any MIDI Note; any change of MIDI Note Number (so repeated notes trigger the step advance the first time, but not after that); Note Number 0 (very low frequency!); Note Zero with a MIDI Velocity of zero (the lowest, quietest note in MIDI 1.0!); or any note with a MIDI Velocity of 1 (the quietest note in MIDI 1.0). Because these MIDI Events are in the clip on the track in Ableton Live, they are synced to Live's transport, but there's nothing to stop you giving those notes all sorts of weird timing, and don't forget the 'ignore repeated note' mode. The lower step sequencer is always synced to Live's transport, but you can choose anything from the step advancing every 8 bars to every quarter beat, which is quite a big range.
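The MIDI-triggered modes boil down to a simple filter on incoming notes. This is a minimal Python sketch with hypothetical mode names, covering four of the five modes; I've left out the 'Note Zero with a MIDI Velocity of zero' mode because in MIDI 1.0 a Note On with velocity 0 is conventionally treated as a Note Off, and how the device handles that isn't described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NoteOn:
    note: int  # MIDI note number, 0-127
    velocity: int  # MIDI velocity, 1-127 for a sounding note


class StepTrigger:
    """Decide whether an incoming note should advance the step."""

    def __init__(self, mode):
        self.mode = mode
        self.last_note: Optional[int] = None

    def should_advance(self, event):
        if self.mode == "any_note":
            return True
        if self.mode == "note_change":
            # Repeated notes advance the step the first time only.
            changed = event.note != self.last_note
            self.last_note = event.note
            return changed
        if self.mode == "note_zero":
            return event.note == 0
        if self.mode == "velocity_one":
            return event.velocity == 1
        raise ValueError(f"unknown mode: {self.mode}")


clip = [NoteOn(60, 100), NoteOn(60, 100), NoteOn(62, 1), NoteOn(0, 90)]
results = {}
for mode in ("any_note", "note_change", "note_zero", "velocity_one"):
    trigger = StepTrigger(mode)
    results[mode] = [trigger.should_advance(event) for event in clip]
    print(mode, results[mode])
```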

Both sequencers have the same controls after the speed/sync section. Below the step number and a little count-up indicator is the Direction control, which selects left-to-right (ascending through the numbered steps), right-to-left (descending), or back & forth (palindrome mode, as some say). Underneath are two tiny buttons. The '1-8'/'Skips' toggle forces the full 8 steps when it is showing '1-8', whilst in 'Skips' mode the step numbers can be clicked so that they turn into 'X's, and then that step will not happen (and the sequence will be shorter). Sequences that are one step long are okay, but they aren't very interesting! As you click on the step numbers to change them into 'X's, a row of tiny numbers changes to show which step numbers are missing. The 'Shuffle' button changes the order in which the sequencer plays the steps, and again the row of tiny numbers changes to reflect the new order. Each time you click the 'Shuffle' button, the order will change. The lowest controls are nudge '+/-' buttons for the sequence length, which is shown as a small blue number on the left side. The sequence length automatically changes when you set up skips.
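The three Direction settings can be sketched as the order in which positions in the look-up table are visited. This Python sketch uses a hypothetical function name, and whether back & forth repeats the end steps is an assumption: this version does not repeat them, so four steps play 1, 2, 3, 4, 3, 2, 1, 2...

```python
def step_sequence(length, direction, ticks):
    """Return the positions (1-based) visited over `ticks` clock ticks.

    direction: "up" (left-to-right), "down" (right-to-left) or
    "palindrome" (back & forth, end steps not repeated).
    """
    if direction == "up":
        cycle = list(range(1, length + 1))
    elif direction == "down":
        cycle = list(range(length, 0, -1))
    elif direction == "palindrome":
        forward = list(range(1, length + 1))
        cycle = forward + forward[-2:0:-1]  # back down, skipping both ends
    else:
        raise ValueError(f"unknown direction: {direction}")
    return [cycle[t % len(cycle)] for t in range(ticks)]


print(step_sequence(4, "up", 6))  # [1, 2, 3, 4, 1, 2]
print(step_sequence(8, "down", 3))  # [8, 7, 6]
print(step_sequence(4, "palindrome", 8))  # [1, 2, 3, 4, 3, 2, 1, 2]
```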

The central section is 'old-school': rotary controls for setting the step values, and big indicators to show which step is playing. The modern twist here is that the step numbers (in the grey squares) can be used to skip steps, but there's another hidden twist - you can control the rotary controls and the skip buttons with Ableton Live's 'remote control' 'control voltage' system. To do this, you either use the Map button in an LFO or other device, and then click on the rotary control or the grey step number square in MIDIdifferentTWO, or you put Ableton Live into MIDI Learn mode (Apple/Ctrl-M to get into the 'blue' mode), then click on a rotary control or a grey step number square and move a slider or press a button on an external MIDI Controller. If you did this in the right order, then the rotary control or step number will show the note number or MIDI controller number that you have mapped to that control in a small grey box, and a line will appear in the 'Mapping' table at the upper left of Ableton's screen.
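When a MIDI Controller knob is mapped to a rotary control, its 0-127 value has to be scaled onto the control's range. Here's a minimal Python sketch assuming a simple linear mapping between a minimum and a maximum (Live's Mapping table shows Min and Max values for each mapping, but treating the curve as linear here is an assumption); the function name is hypothetical.

```python
def cc_to_parameter(cc_value, minimum, maximum):
    """Linearly scale a 7-bit MIDI CC value (0-127) onto a control's range."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values run from 0 to 127")
    return minimum + (maximum - minimum) * cc_value / 127


print(cc_to_parameter(0, 0, 127))  # 0.0
print(cc_to_parameter(127, 0, 127))  # 127.0
print(cc_to_parameter(64, 32, 96))  # part-way up a narrowed 32-96 range
```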

For testing, I used a Novation Launch Control to control the skips:

In the photo above you can see that the four lit buttons on the Novation Launch Control have turned steps 5, 6, 7 and 8 on the upper sequence into 'X's, and so those steps will be skipped. Also note that the sequence length has changed to 4 steps (the little number on the left). When I took the photo I was just about to map the rotary controls on the Launch Control to the rotary controls for the step values in MIDIdifferentTWO, so that I could control the sequence from the external MIDI Controller. You could, of course, use just about any MIDI Controller to control the sequencers inside MIDIdifferentTWO... Using an external MIDI Controller like this crosses the line from DAWless to 'DAWed', of course, but it definitely looks 'DAWless'!

After the eight sets of step controls, the section on the right hand side deals with the output values of the step sequencers. The large blue numbers are the current output value - there's a label that says so! Underneath this is a 'Normal/Invert' toggle button, which inverts the value (so 127 becomes zero, and zero becomes 127). Next on the right are three rotary controls. 'Offset' adds to the value of each step, and can be used as a way to shift all of the values at once. If you are controlling a filter with the step sequencer, then this would behave just like the cut-off frequency control in the filter, for example. The 'Depth' rotary control scales the step values. At zero it scales the values down to nothing, so you won't hear any effect. At 100% the output is the values shown on the rotary controls. At 200%, the output is scaled to twice the values shown on the rotary controls - which means that the output value may well 'max out' at 127! The final rotary control is the 'Smooth' control, which is like the 'Slew' control on modular synths, and it turns abrupt jumps of value into more gentle slower 'slides' - it 'smoothes' the output!
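Here's a minimal Python sketch of the output section. The order of operations (scale by Depth, add Offset, clamp to 0-127, then Invert) is my assumption, not necessarily what the device does, and 'Smooth' is modelled as a one-pole lag, which is one common way to get slew-like slides; a true slew limiter caps the rate of change instead. The function names are hypothetical.

```python
def shape(step_value, depth=1.0, offset=0, invert=False):
    """Apply Depth, Offset and Invert to one step value (0-127).

    depth is a fraction: 0.0 = 0%, 1.0 = 100%, 2.0 = 200%.
    """
    value = min(127.0, max(0.0, step_value * depth + offset))  # 'max out' at 127
    if invert:
        value = 127.0 - value  # 127 becomes 0, 0 becomes 127
    return value


def smooth(values, amount):
    """One-pole lag: amount 0.0 = no smoothing, nearer 1.0 = slower slides."""
    if not values:
        return []
    out = []
    current = values[0]
    for target in values:
        current += (target - current) * (1.0 - amount)
        out.append(current)
    return out


print(shape(100, depth=2.0))  # 127.0 (maxed out)
print(shape(100, invert=True))  # 27.0
print(shape(40, depth=0.0, offset=30))  # 30.0 (only the Offset is left)
print(smooth([0, 100, 100], 0.5))  # [0.0, 50.0, 75.0]
```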

Finally, there are two 'Map' buttons and their counterparts, the 'Unmap' buttons. You use these to map the output values of the two step sequencers to other instruments, effects, or utilities inside Ableton Live. Controls that are being controlled generally go grey to indicate that they are being controlled from somewhere else (LFO, MIDI Controller, etc.), and their value moves on its own! To unmap and select another control, you use the 'Unmap' button.

Sinking and sourcing?

These are electronics terms for inputs and outputs respectively. In lots of electronic interfaces, an output is a source of current flow (it 'sources' current, in the colloquial phrase), and an input is a sink of current flow (it 'sinks' it). So for the MIDIdifferentTWO device, the step rotary controls and the grey step number squares are sinks, and an LFO or MIDI Controller that is controlling them via Ableton's 'remote control' 'control voltage' system would be a source. At the output of MIDIdifferentTWO, the two big 'Map' buttons are sources, and whatever they control would be sinks. Jargon, that's all.

In use

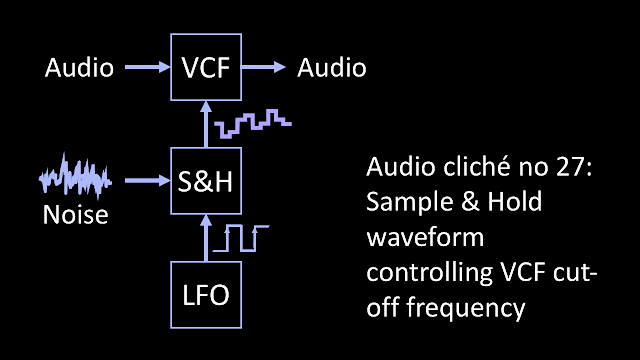

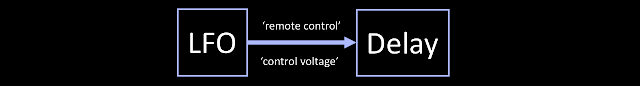

One thing to try is to change the cut-off frequency of a filter (a well-worn cliche that you can also do with the stock/factory 'Auto Filter' effect), or change the time delay of Delay (there isn't a stock/factory effect that does this!), or change the Depth in the Saturator effect to give an interesting rhythmic 'bite' variation. Basically, whatever your favourite 'control to tweak' is, you can now apply a shimmering, rhythmic version of it automatically, and free up that hand for something else, like pitch bend, or a mod wheel, or mousing, or adjusting knobs on outboard gear, or anything else. Now I know that I already have a device called '3rd Hand' (look it up on MaxForLive.com), but this is a bit like having a third hand!

Getting MIDIdifferentTWO_mr

You can get MIDIdifferentTWO_mr here: https://maxforlive.com/library/device/6160/mididifferenttwo

Here are the instructions for what to do with the .amxd file that you download from MaxforLive.com:

https://synthesizerwriter.blogspot.co.uk/2017/12/where-do-i-put-downloaded-amxd.html

(In Live 10, you can also just double-click on the .amxd file, but this puts the device in the same folder as all of the factory devices...)

Oh, yes, and sometimes last-minute fixes do get added, which is why a blog post is sometimes behind the version number on MaxForLive.com...

Modular Equivalents

In terms of basic modular equivalents, implementing MIDIdifferentTWO_mr takes just two step sequencers, giving an ME of 2. The ability to control step values and skips may vary with the specific sequencer, but if it is implemented, then it is just more patch cables. Perhaps MEs should also include some sort of measure of the number of patch cables that are required?

---
